If it could, would we want it to? Suppose humanity managed to automate everything. Would this lead to a fairer, more equal society, free of prejudice based on individual intelligence, physical strength or appearance, class, gender, race, …? How would everybody cope without an occupation; would there be a struggle for meaning? What risks and impacts are inherent in creating an Artificial Intelligence that may ultimately be able to surpass our own intelligence by recursively improving itself? Would AI's interests and humanity's remain aligned? If not, would this be humanity's worst mistake (from its own perspective)? I don’t know the answers to these questions and won’t speculate now; should and could are fundamentally different questions.

The short answer is yes: I think it will be possible, at some point in the future, for Artificial Intelligence to replace clinicians. The same is true of many other professions, regardless of their physical or intellectual demands. However, this is not possible today.

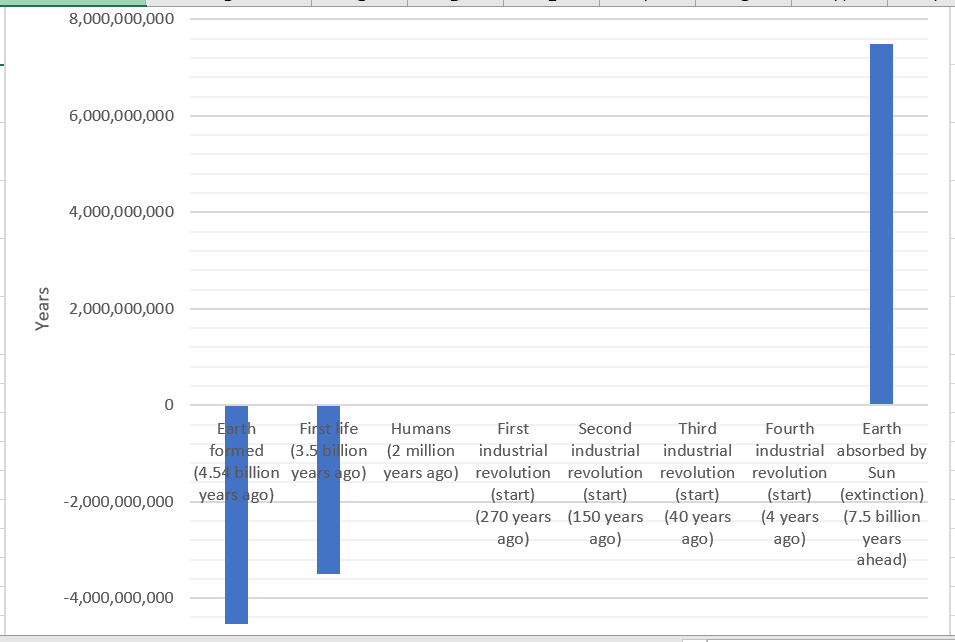

If not now, then when? AI and robotics are the subject of the fourth industrial revolution – whilst this is still primarily in an R&D phase, some early forms of these technologies are already becoming economically and commercially viable. Consider the timeline above, which is designed to illustrate the exponential increase in the rate of (global) change.

Assuming we don’t ruin the planet we’re living on, kill ourselves with nuclear or biological weapons, or fall foul of some other existential threat (whether of our own making or not), we’ve also got quite a bit of time left on this planet (before we need to figure out how to survive as an extraterrestrial species). Given the amount of change in the last 270 years, and the exponential increase in the pace of change in the last 40 years, I don’t think this will be a long time coming.

Based on four polls conducted in 2012 and 2013 and referenced by Wikipedia’s article on the Technological Singularity (a hypothesis popularised by Vernor Vinge), the median estimate was a 50% chance that artificial general intelligence (AGI) would be developed by 2040–2050.

The Future of Life Institute has been set up to mitigate existential risks facing humanity.

If we allow AI to continue, how accountable is it in our society? The European Union is debating AI personhood – should machines take on legal status and bear ultimate responsibility for their actions? If not, who is accountable? The manufacturer? The owner?

To further complicate things, perhaps there will not be a clear black-and-white line between humans and Artificial Intelligence. Do we have the right to self-modification (aka hacking)? What is the difference between searching the Internet on a laptop, asking Siri a question and embedding the same technology in our bodies? Are we already hacking our bodies with laser eye surgery and cosmetic surgery? What about genetic modification: is it right to go beyond current CRISPR applications and modify the human genome for other purposes (e.g. to become stronger, more intelligent or more attractive)? Is this the next stage of human evolution? If so, will our children need to do this in order to remain the fittest and survive? Would you hack your own body?

Please let me know your thoughts!

I think the development of AI clinicians is almost inevitable; it is more a question of how soon we will start seeing them. I would have said that the physical problem was more likely to be the limiting factor. However, having seen how fast Boston Dynamics is developing its terrifying robots, it’s not hard to imagine a near future where patients are pinned to the bed by four dog bots using the “5 degree-of-freedom” arms protruding from where their heads should be, while a parkour-enabled Atlas robot leans over and booms ‘calm down’ in the voice of Stephen Hawking.

My guess is we will first start seeing these things in scenarios where they make the most economic sense: hostile environments such as the front line, extremely remote outposts or even off-world habitats like the space station. Let’s face it, the US Army are going to buy these long before the NHS does, assuming the NHS still exists and the US Army doesn’t consist solely of T-800 series Terminators.

There are so many ethical dilemmas to address that I can’t see how we could possibly proceed without conceding some aspects of what is socially acceptable today. AI progress will outstrip public debate and the legal system. People will put up with a few AI road deaths as long as their car can still come and collect them from the pub on a cold night.

There is also the interesting problem of neural networks, where you may not be able to tell how a decision was arrived at, only that it was the best choice for the desired outcome given the data. Hopefully, this outcome is something the supplier, analyst, doctor, family and patient would all agree on, but probably not. So there are some interesting questions around how we control AI, if we are even able to. You’ve seen I, Robot; you know what is coming.

Will an AI clinician be more accurate? Probably. Will an AI clinician be more economical? Yes, eventually. Will an AI clinician be impartial? Probably not, given the biases in AI that we are already seeing. Would being treated by an AI clinician be uncomfortable or unpleasant? Yes, but our AI overlords will probably be giving us far more to worry about.